AI Content Overload: How to Spot and Protect Against Neural-Slop

By 2025, AI-generated content floods feeds, blurring truth and fueling scams. Learn to spot neural-slop, protect yourself, and teach loved ones practical media literacy to stay safe online.

Artificial intelligence has moved from a novelty to a daily reality online. By late 2025, AI-generated images and videos can look almost indistinguishable from real ones, forcing us to rethink online trust.

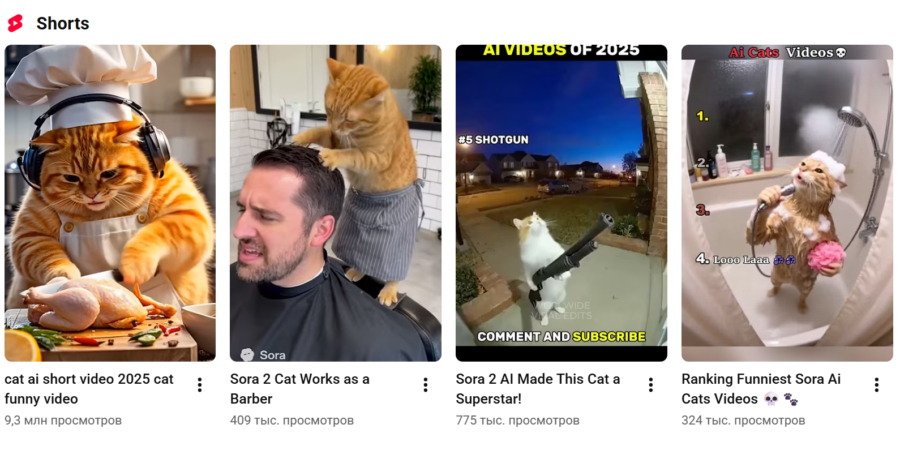

Social feeds are filled with fake trailers and imagined scenes from films and TV shows, created by algorithms chasing engagement. You might stumble upon previews for upcoming blockbusters or endings to popular series that never happened, and often these are not labeled as AI-made. People share them without realizing the truth, boosting their reach and steering recommendations.

Even photos can be very convincing: AI systems recreate lighting, textures, and tiny details so well that a casual glance may not reveal anything artificial.

AI content now spreads across the internet at speed. It is not just fan-made jokes: the flood includes countless videos and memes that crowd out real content, turning feeds into a sea of synthetic visuals.

Even watermarks and branding, such as Sora's watermark, cannot be relied on to flag AI-made clips, because many users may not recognize or trust those marks.

What the term neural-slop means

Experts use the term neural-slop for a rapid stream of AI-generated content designed to maximize views rather than provide meaningful information. It marks a shift from curated entertainment to a flood of AI-made content. The top layer is flashy but often shallow; beneath it, a mass of meaningless material grows.

Neural-slop is not just about trailers or funny photos. It is a constant flow of low-quality AI content produced for volume, not value. People scroll through it without learning or retaining anything, while the online space becomes noisier and less memorable.

How scammers exploit AI content

When people stop distinguishing between real and fake, scammers use AI to create convincing images and short videos that pressure viewers. A common technique involves fake emergencies, medical requests, or urgent help messages that prompt quick actions.

We have seen scenarios where a deepfake of a famous person appears to ask for donations or to approve a transfer. The realism of AI makes these schemes harder to detect, since faces, lighting, and expressions look authentic.

The danger is that better AI means fewer obvious telltale signs: no odd artifacts or impossible proportions. The best defense is human vigilance: question everything, verify with independent sources, and do not trust an image just because it looks real.

Even if neural-slop can be ignored, the risk remains: false realities can manipulate decisions, and people may miss important facts or fall for fraud. The sooner we learn to judge evidence critically, the less likely we are to become part of someone else's scheme.

Reducing the impact of neural content

Today, so-called junk AI content spreads at an alarming rate. Every reaction, share, or even comment signals to the algorithm that it should show more of it. To curb the harm, try these simple steps:

- Do not share questionable images or videos, and avoid liking them. Even if a clip is shocking or funny, your action helps it grow in the feed. Often it is best to simply move on.

- Check sources. Do not trust first impressions. Look for official news outlets, verified accounts, or confirmations from other people involved. If nothing corroborates it, pause and think.

- Develop a habit of skepticism. In a world where any image or video can be AI generated, trusting what you see is risky. Ask yourself: is this real? Who verified it? Can I confirm it elsewhere?
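For readers who like to see a rule written down precisely, the steps above can be sketched as a tiny decision helper. This is only an illustration, not a detection tool: the `Post` fields and the `next_step` function are hypothetical names invented for this example, and the answers still have to come from your own checking.

```python
from dataclasses import dataclass


@dataclass
class Post:
    """Hypothetical record of what a reader has found out about a post."""
    from_official_or_verified_source: bool  # official outlet or verified account
    independent_confirmations: int          # other sources that back it up


def next_step(post: Post) -> str:
    """Apply the 'check sources' step: with no corroboration, do not engage."""
    corroborated = (
        post.from_official_or_verified_source
        or post.independent_confirmations > 0
    )
    if not corroborated:
        # Assumption: 'pause and think' is read here as 'do not like or share'.
        return "move on without liking or sharing"
    return "corroborated, but stay skeptical"


viral_clip = Post(from_official_or_verified_source=False, independent_confirmations=0)
print(next_step(viral_clip))
```

The point of the sketch is the order of the checks: engagement comes last, and only after something independent backs the claim.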

Protecting loved ones from AI scams

Scammers already use AI widely, and many people do not realize how easily photos or videos can be faked. Share these tips with friends and family who may be vulnerable:

- Explain that any image or video can be created with AI, and that this is easier than ever. Emphasize that a convincing clip with a well-known person or dramatic event is not necessarily real.

- Show examples of how a clip could be generated with different people and scenarios. This helps illustrate how easy it is to mislead visually.

- Remind them of a simple rule: when a message or video triggers alarm, pause before reacting. Do not act on impulse.

- Encourage direct contact with the person involved or other sources to verify claims before sharing or sending money.
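The "pause before reacting" rule from the tips above can also be written out as a short sketch. The word list and the `advice` function are assumptions made up for this illustration; real scam messages vary widely, so a missing keyword never proves that a message is safe.

```python
# Illustrative pressure phrases only; this list is an assumption, not a standard.
ALARM_WORDS = ("urgent", "emergency", "immediately", "right now", "send money")


def triggers_alarm(message: str) -> bool:
    """Return True if the message contains any of the illustrative pressure phrases."""
    text = message.lower()
    return any(word in text for word in ALARM_WORDS)


def advice(message: str, verified_by_direct_contact: bool) -> str:
    """Apply the rule: alarming and unverified means stop and check first."""
    if triggers_alarm(message) and not verified_by_direct_contact:
        return "pause and contact the person directly"
    return "no red flag found by this simple check"


print(advice("URGENT: I am in hospital, send money today", False))
```

Note that verification here means contacting the person through a channel you already trust, not replying to the message itself.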

Staying attentive, thinking critically, and fact-checking remain the best defenses against AI risks. In a world where visuals no longer guarantee truth, a habit of doubt helps preserve sound judgment.

Expert comment

Expert note: Digital security researchers warn that visual authenticity is no longer proof of truth; verify with independent sources and question every claim. A disciplined approach to checking information keeps audiences safer online.

Summary

AI-generated content continues to flood feeds, challenging how we discern real from fake. The rise of neural-slop shows that volume often trumps value, making media literacy essential. By practicing careful verification and sharing responsibly, readers can protect themselves and others from deception.

Key insight: In a world flooded with AI-made visuals, verify before you share, because truth depends on thoughtful checking rather than first impressions.

Explore useful articles in Tech News as of 19-12-2025.