Anthropic and OpenAI Forge Landmark AI Safety Partnerships with U.S. Government

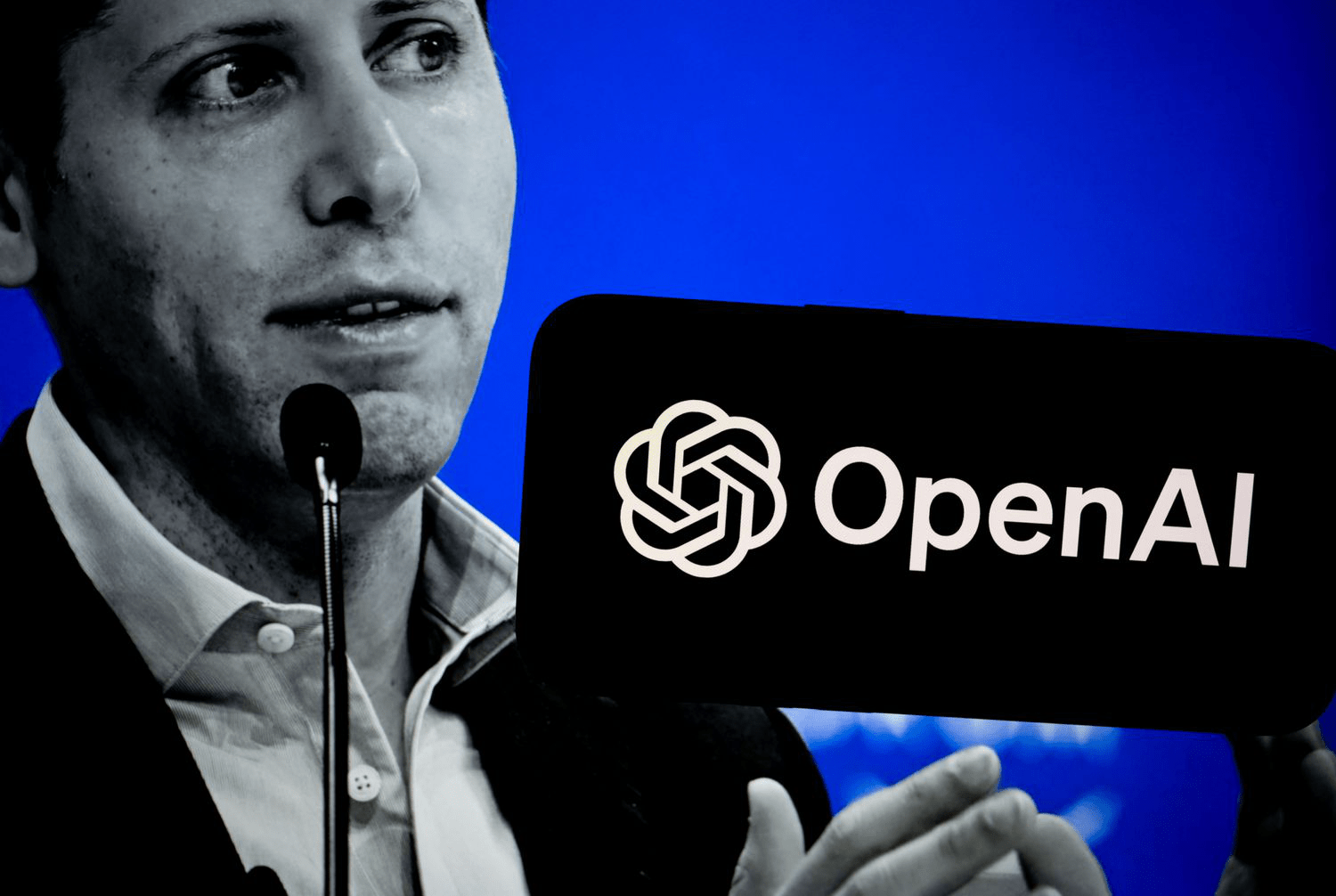

Anthropic and OpenAI have entered groundbreaking agreements with the U.S. Artificial Intelligence Safety Institute to collaboratively enhance AI safety and mitigate emerging risks from advanced AI technologies.

Bill McColl brings over 25 years of expertise as a senior producer and writer across TV, radio, and digital platforms, leading teams to deliver impactful news coverage on significant global events.

Highlights

- Anthropic and OpenAI have formalized partnerships with the U.S. agency dedicated to AI safety to jointly address potential risks posed by cutting-edge AI systems.

- The U.S. Artificial Intelligence Safety Institute emphasizes that these collaborations will drive innovation in safe, reliable, and ethical AI development.

- These agreements mark the first-ever formal collaborations between the U.S. government and private AI firms focused on establishing robust AI safety standards.

AI developers Anthropic and OpenAI have signed memorandums of understanding with the U.S. Artificial Intelligence Safety Institute, which operates under the Department of Commerce’s National Institute of Standards and Technology (NIST). The agreements establish a framework for cooperation on AI safety research, testing, and evaluation.

Announced on Thursday, the initiative is the first formal arrangement of its kind between federal authorities and AI companies, and is intended to promote trustworthy and secure AI development.

The agreements grant the AI Safety Institute privileged access to the companies’ latest AI models both prior to and following their public deployment. This access enables collaborative efforts to assess AI capabilities, identify safety risks, and develop effective mitigation strategies.

Collaborating with UK Counterparts to Enhance AI Safety

Furthermore, the U.S. AI Safety Institute plans to partner with its U.K. equivalent to provide ongoing feedback to Anthropic and OpenAI, fostering continuous improvements in model safety and reliability.

Elizabeth Kelly, Director of the U.S. AI Safety Institute, described these agreements as a pivotal step toward responsibly guiding AI's future, signaling the start of deeper government-industry collaboration.

Jack Clark, co-founder of Anthropic, expressed enthusiasm on the social media platform X about joining forces with the AI Safety Institute, highlighting the critical role of independent third-party testing in the AI ecosystem and applauding governmental efforts to establish dedicated safety institutions.

